In a recent article published in the journal Nature, researchers used unstructured clinical notes from electronic health records (EHRs) to train NYUTron, a large language model for medical language, and subsequently evaluated its ability to perform five clinical and operational predictive tasks.

Study: Health system-scale language models are all-purpose prediction engines. Image Credit: Elnur / Shutterstock

Background

The information needed to make medical decisions is highly scattered, e.g., across a patient's medical records, including their prescriptions, laboratory, and imaging reports. Physicians aggregate all the relevant information from this pool of data into handwritten notes, which document and summarize patient care.

Current clinical predictive models rely on structured inputs retrieved from patient EHRs or on clinician inputs, which complicates data processing, model development, and deployment. Consequently, most medical predictive models are trained, validated, and published, yet never used in real-world clinical settings, a gap often called the 'last-mile problem'.

In contrast, artificial intelligence (AI)-based large language models (LLMs) rely on reading and interpreting human language. Thus, the researchers theorized that LLMs could read physicians' handwritten notes to solve the last-mile problem. In this way, LLMs could facilitate medical decision-making at the point of care for a broad range of clinical and operational tasks.

About the study

In the present study, researchers leveraged recent advances in LLM-based systems to develop NYUTron and prospectively assessed its efficacy in performing five clinical and operational predictive tasks, as follows:

- 30-day all-cause readmission

- in-hospital mortality

- comorbidity index prediction

- length of stay (LOS)

- insurance denial prediction

Further, the researchers performed a detailed analysis of readmission prediction, i.e., the likelihood of a patient being readmitted to the hospital within 30 days of discharge for any reason. Specifically, they performed five additional evaluations in retrospective and prospective settings; for instance, the team evaluated NYUTron's scaling properties and compared them with those of other models using varying numbers of fine-tuning data points.

In retrospective evaluations, they compared six physicians at different levels of seniority head-to-head against NYUTron. In prospective evaluations running between January and April 2022, the team tested NYUTron in an accelerated format. They loaded it into an inference engine that interfaced with the EHR and read discharge notes once they were signed by treating physicians.

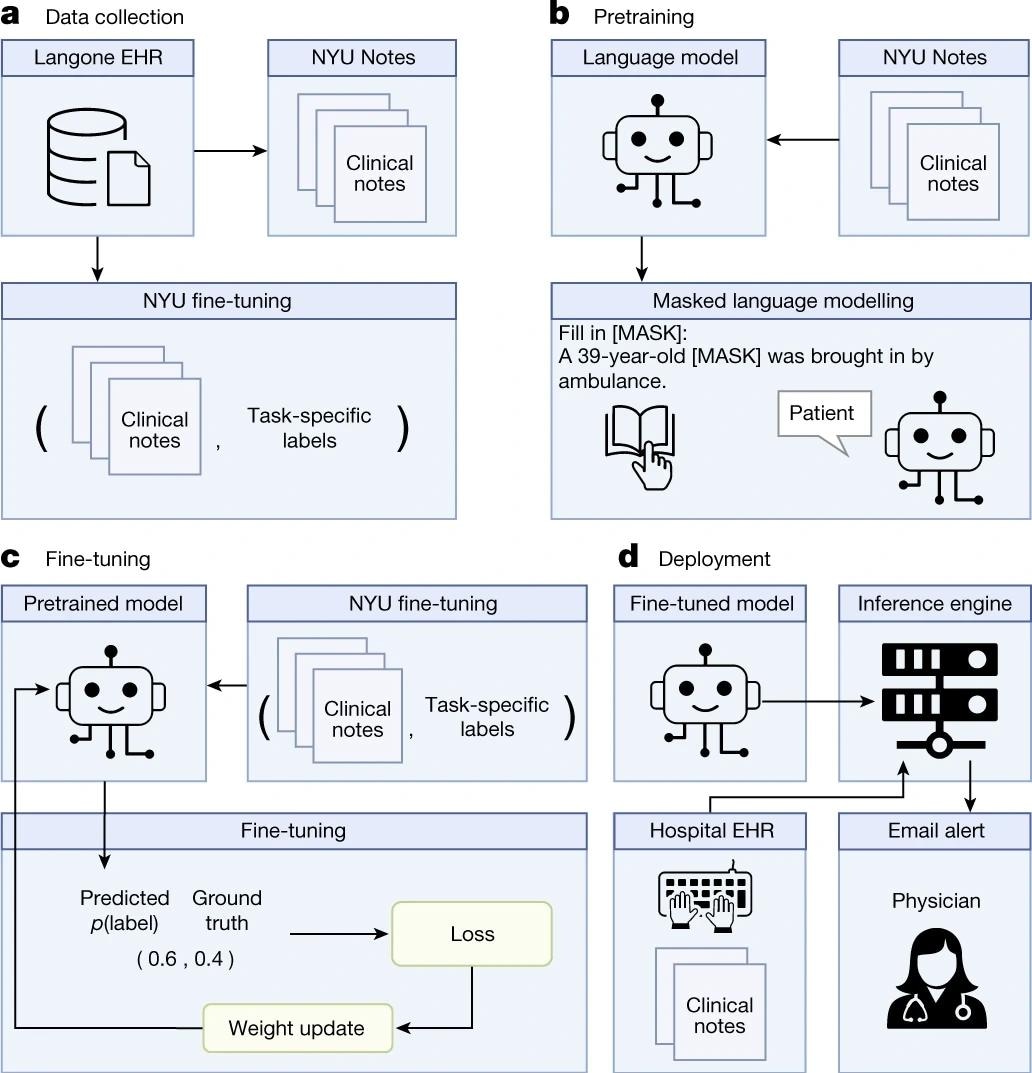

a, We queried the NYU Langone EHR for two types of datasets. The pretraining dataset, NYU Notes, contains 10 years of inpatient clinical notes (387,144 patients, 4.1 billion words). There are five fine-tuning datasets. Each contains 1–10 years of inpatient clinical notes (55,791–413,845 patients, 51–87 million words) with task-specific labels (2–4 classes). b, We pretrained a 109 million-parameter BERT-like LLM, termed NYUTron, on the entire EHR using an MLM task to create a pretrained model for medical language contained within the EHR. c, We subsequently fine-tuned the pretrained model on specific tasks (for example, 30-day all-cause readmission prediction) and validated it on held-out retrospective data. d, Lastly, the fine-tuned model was compressed into an accelerated format and loaded into an inference engine, which interfaces with the NYU Langone EHR to read discharge notes when they are signed by treating physicians.
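The masked language modeling (MLM) task mentioned in panel b can be illustrated with a minimal sketch. This is not the authors' pipeline: the 15% masking rate follows the original BERT convention and is an assumption here, the function name `mask_tokens` and the example note are hypothetical, and BERT's additional random-replacement rule is omitted for brevity. During pretraining, the model would be asked to recover the hidden tokens stored in `labels`.

```python
import random

MASK_TOKEN = "[MASK]"  # BERT-style placeholder token


def mask_tokens(tokens, mask_prob=0.15, seed=0):
    """Simplified MLM masking (assumed 15% rate, illustrative only).

    Returns (masked, labels): labels[i] is the original token where
    position i was masked, and None everywhere else.
    """
    rng = random.Random(seed)
    masked, labels = [], []
    for tok in tokens:
        if rng.random() < mask_prob:
            masked.append(MASK_TOKEN)  # hide the token from the model
            labels.append(tok)         # keep it as the prediction target
        else:
            masked.append(tok)
            labels.append(None)
    return masked, labels


# Hypothetical clinical-note fragment, split on whitespace for simplicity.
note = "patient discharged home in stable condition with follow up in two weeks".split()
masked, labels = mask_tokens(note, mask_prob=0.15, seed=1)
print(masked)
print(labels)
```

A real tokenizer would operate on subword units rather than whitespace-separated words; the sketch only shows how inputs and prediction targets are paired.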

Results

NYUTron achieved an overall area under the curve (AUC) ranging from 78.7% to 94.9%, an improvement of up to 14.7% in AUC over prior conventional models. Additionally, the authors demonstrated the benefits of pretraining NYUTron on clinical text, which increased its generalizability through fine-tuning and eventually enabled its full deployment in a single-arm, prospective trial.
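For readers unfamiliar with the metric, AUC can be read as the probability that a randomly chosen positive case (e.g., a readmitted patient) receives a higher risk score than a randomly chosen negative case. The sketch below computes it that way on made-up data; the function name `auc` and the toy labels and scores are hypothetical and unrelated to the study's data.

```python
def auc(labels, scores):
    """AUC as P(score of random positive > score of random negative).

    Ties count as half a win. Pairwise O(n^2) version, fine for small data.
    """
    pos = [s for y, s in zip(labels, scores) if y == 1]
    neg = [s for y, s in zip(labels, scores) if y == 0]
    if not pos or not neg:
        raise ValueError("need at least one positive and one negative example")
    wins = 0.0
    for p in pos:
        for n in neg:
            if p > n:
                wins += 1.0
            elif p == n:
                wins += 0.5
    return wins / (len(pos) * len(neg))


# Hypothetical toy data: 1 = readmitted, 0 = not readmitted.
toy_labels = [1, 0, 1, 0, 0, 1]
toy_scores = [0.9, 0.2, 0.6, 0.7, 0.1, 0.8]
toy_auc = auc(toy_labels, toy_scores)
print(f"toy AUC = {toy_auc:.3f}")
```

An AUC of 0.5 corresponds to random ranking and 1.0 to perfect ranking, which gives context for the 78.7–94.9% range reported above.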

Readmission prediction is a well-studied task in the published literature on medical informatics. In its retrospective evaluation, NYUTron outperformed the physicians: the median false positive rate (FPR) was 11.11% for both NYUTron and the physicians, but the median true positive rate (TPR) was higher for NYUTron, 81.72% vs. 50%.

In its prospective evaluation, NYUTron predicted 2,692 of the 3,271 readmissions (82.30% recall) with 20.58% precision and an AUC of 78.7%. Next, a panel of six physicians evaluated a random sample of 100 readmitted cases captured by NYUTron and found that some of NYUTron's predictions were clinically relevant and could have prevented readmissions.
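The recall figure can be checked directly from the counts in the article, and the reported precision implies roughly how many discharges the model flagged in total. The short sketch below does this arithmetic; the variable names are illustrative, and the flagged total is an estimate derived from the rounded precision, not a number reported in the article.

```python
# Counts and precision as reported in the article.
true_positives = 2692        # readmissions that NYUTron predicted
total_readmissions = 3271    # all readmissions in the prospective period
reported_precision = 0.2058  # fraction of flagged cases that were readmitted

# Recall = TP / (TP + FN) = TP / all actual readmissions.
recall = true_positives / total_readmissions

# Precision = TP / (TP + FP), so total flagged = TP / precision (an estimate).
implied_flagged = true_positives / reported_precision
implied_false_positives = implied_flagged - true_positives

print(f"recall = {recall:.2%}")
print(f"implied flagged cases ~ {implied_flagged:.0f}")
print(f"implied false positives ~ {implied_false_positives:.0f}")
```

In other words, under these figures about four in five flagged patients were not readmitted, which is why the physician review of flagged cases matters for judging clinical usefulness.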

Intriguingly, 27 NYUTron-predicted readmissions were preventable, and patients predicted to be readmitted were six times more likely to die in the hospital. Also, three of the 27 preventable readmissions involved enterocolitis caused by Clostridioides difficile, a bacterial infection that frequently occurs in hospitals. Notably, it results in the death of one in 11 infected people aged >65.

The researchers used 24 NVIDIA A100 GPUs with 40 GB of VRAM for three weeks to pretrain NYUTron, and eight A100 GPUs for six hours per run for fine-tuning. Generally, this amount of computation is inaccessible to researchers. However, the study data demonstrated that high-quality datasets for fine-tuning were more valuable than pretraining. Based on their experimental results, the authors recommended that users rely on local fine-tuning when their computational resources are limited.
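To put the two compute budgets on a common scale, the figures above can be converted to GPU-hours. This is a back-of-the-envelope sketch that assumes the GPUs ran continuously for the full three weeks, which the article does not state; the variable names are illustrative.

```python
HOURS_PER_DAY = 24
DAYS_PER_WEEK = 7

# Pretraining: 24 GPUs for 3 weeks (assumed continuous use).
pretrain_gpus = 24
pretrain_weeks = 3
pretrain_gpu_hours = pretrain_gpus * pretrain_weeks * DAYS_PER_WEEK * HOURS_PER_DAY

# Fine-tuning: 8 GPUs for 6 hours per run.
finetune_gpus = 8
finetune_hours_per_run = 6
finetune_gpu_hours = finetune_gpus * finetune_hours_per_run

ratio = pretrain_gpu_hours / finetune_gpu_hours

print(f"pretraining: {pretrain_gpu_hours} GPU-hours")
print(f"fine-tuning: {finetune_gpu_hours} GPU-hours per run")
print(f"pretraining costs about {ratio:.0f}x one fine-tuning run")
```

Under this assumption, a single fine-tuning run is a small fraction of the pretraining cost, which is consistent with the authors' advice to favor local fine-tuning when compute is limited.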

Furthermore, in this study, the researchers used an encoder-based architecture, i.e., bidirectional encoder representations from transformers (BERT), and demonstrated the benefits of fine-tuning on medical data, emphasizing the need for a domain shift from general to medical text in LLM research.

Conclusions

To summarize, the study results suggested the feasibility of using LLMs as prediction engines for a suite of medical (clinical and operational) predictive tasks. The authors also raised the concern that physicians could over-rely on NYUTron predictions, which, in some cases, could lead to lethal consequences, a genuine ethical concern. Thus, the study results highlight the need to optimize human–AI interactions and assess sources of bias or unanticipated failures.

In this regard, the researchers recommended different interventions depending on the NYUTron-predicted risk for patients. For example, follow-up calls are adequate for a patient at low risk of 30-day readmission; however, premature discharge is a strict 'NO' for patients at high risk. More importantly, while operational predictions could be automated entirely, all patient-related interventions should be performed strictly under a physician's supervision. Nonetheless, LLMs present a unique opportunity for seamless integration into medical workflows, even in large healthcare systems.